In the first article of this series, we tackled some definitions and illustrated what we consider to be AI, as well as how we’re going about making the EHE (our technology and, soon, our middleware) learn and evolve.

In the second article, we illustrated how personality and emotions can color our perceptions of the world, as well as our reactions to various situations. By including them in our technology, we’re making entities that are far more believable and far more human.

Two problems identified in the first article begin to surface, though: unpredictability and the limitations of a closed system. Even if we do a very good job of creating entities that are dynamic and evolving, our world (the computer game in which this technology lives) behaves according to fixed rules. Even if the world’s data evolves, the reality of the game is finite, so the entity’s ability to learn is bounded by that limitation.

This becomes a problem when, for some freak reason, the AI starts learning the “wrong” thing. It happens a lot, and we usually blame the human player for it.

Why?

Because we humans play games for various reasons, not all of them logical. Sometimes we just want to see what will happen if we try something crazy. Sometimes we want to unlock a specific goody, cinematic, prize, etc. Sometimes we just find a specific animation funny and want to see it again and again. Also, a human player can suck at the game, especially at first. What happens then? How is the AI supposed to learn and adapt to the reality of the world into which it’s thrown when the parameters of that reality are not realistic themselves?

We made a game a while back called Superpower 2 (re-released on Steam in 2014; see our “Games” page 🙂). In this game, the player would select a country and play it in what was, if you will, a more detailed version of Risk. But sometimes the player would just pick the US and nuke Paris, for no particular reason. Absolutely nothing in the game world explained that behavior, but there we were anyway. In situations like this one, the AI understandably became confused and reacted outside the bounds we had envisioned for it, because the player was feeding the confusion. The EHE would then learn the wrong thing, and in subsequent games would confront the player based on these parameters, which were wrong in the first place, causing further confusion for players.

The memory hub (or Borg) comes in to help alleviate these situations. Let’s look at it, once again through the lens of traditional human psychology.

The human race is a social one, and that is one of the reasons for our success. We work together, learn from one another, and pass knowledge on from generation to generation. That way, we don’t reset our knowledge with each new generation, and we become more and more advanced as time passes. We also use these social interactions to push each other to conform to a specific “mold” that varies from society to society and evolves over time. A behavior that was perfectly fine a generation ago could get you in trouble today, and vice versa. That “mold” is how we define normalcy: the further you are from the center, the “weirder” you become. If some weirdness makes you dangerous to yourself (if you like to eat chairs, for example) or to others (you like to eat other people), then society as a whole intervenes, either to bring you back towards the center (“stop doing that”) or, in cases where it’s too dangerous, to cut you off from society. The goal is always the same: even as we celebrate each other’s individuality and quirks, we still need to stay consistent with what is considered “acceptable behavior”.

These behaviors are what society has determined “works” to keep it functional and relatively happy. Just a couple of decades ago, gay people could be put in prison for being overtly… well… gay. That was how a majority of people thought, and their reasoning seemed logical at the time: gays were bad for children, this is how God wants it, etc. These days, the pendulum has swung the other way: most people no longer see gay people as a danger to society, and more civil rights are being granted to them. This is how society works through its problems: by converging on what a majority of people have come to accept as normal. Back in the 1950s, someone who said there was nothing wrong with being gay would have been viewed as outside the mainstream. Today, that opinion is the norm.

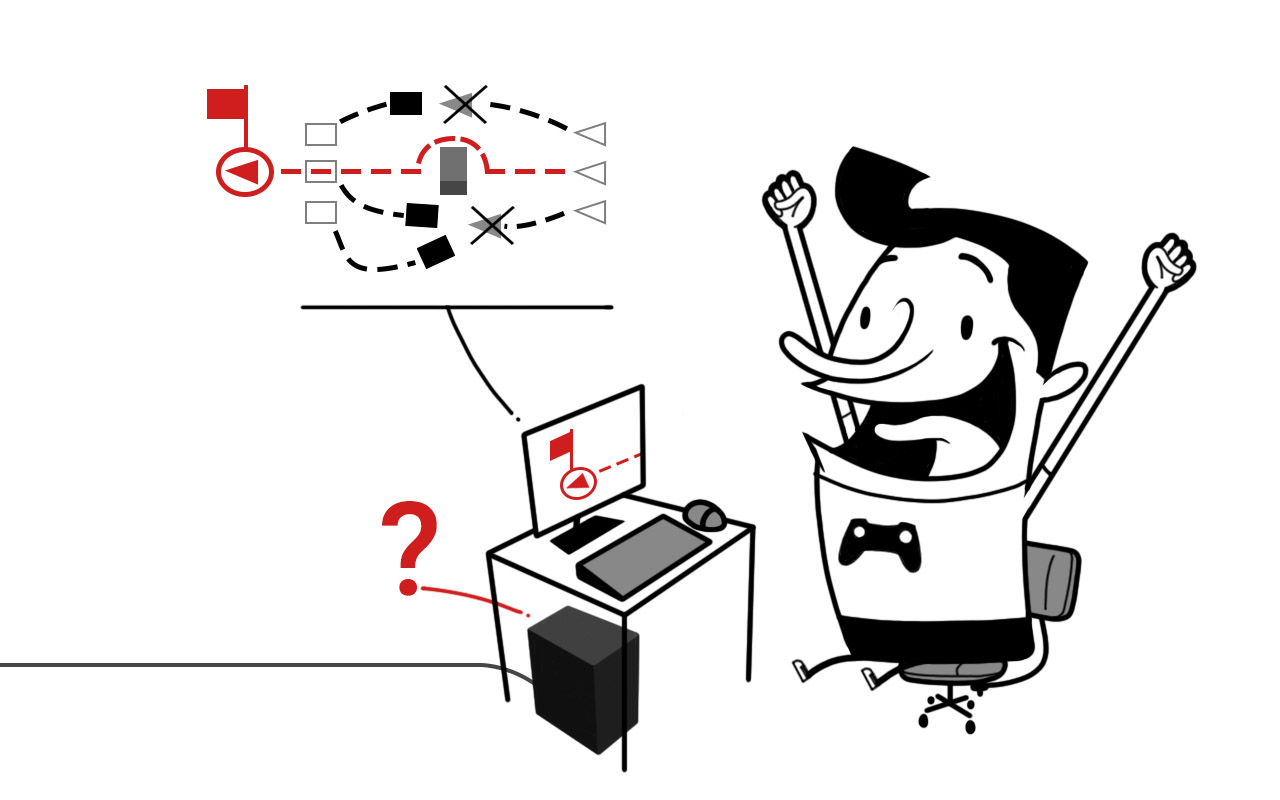

The memory hub thus serves as an aggregate of what works for every EHE entity. Imagine a game powered by the EHE coming out. That game would connect to the memory hub and stay connected, so that it can be validated and updated based on the shared knowledge of thousands of other players around the world.
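To make the idea of an “aggregate of what works” a little more concrete, here is a minimal sketch of how a hub might merge knowledge uploaded by many EHE instances. It rests entirely on our own illustrative assumptions: each instance’s memory is reduced to a success rate per strategy plus the number of games behind it, and the names `EntityReport` and `aggregate` (and the strategy names) are hypothetical, not part of the EHE.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class EntityReport:
    """Hypothetical summary uploaded by one EHE instance."""
    success_rates: dict[str, float]  # strategy name -> observed success rate (0..1)
    games_played: int                # how much experience backs these rates


def aggregate(reports: list[EntityReport]) -> dict[str, float]:
    """Merge reports into a consensus, weighting each entity by its experience."""
    weighted_sum: dict[str, float] = defaultdict(float)
    total_weight: dict[str, int] = defaultdict(int)
    for report in reports:
        for strategy, rate in report.success_rates.items():
            weighted_sum[strategy] += rate * report.games_played
            total_weight[strategy] += report.games_played
    # Skip strategies backed by zero games to avoid dividing by zero.
    return {
        strategy: weighted_sum[strategy] / total_weight[strategy]
        for strategy in weighted_sum
        if total_weight[strategy] > 0
    }


if __name__ == "__main__":
    reports = [
        EntityReport({"naval_blockade": 0.7, "early_nuke": 0.9}, games_played=2),
        EntityReport({"naval_blockade": 0.6, "early_nuke": 0.1}, games_played=50),
        EntityReport({"naval_blockade": 0.65}, games_played=48),
    ]
    print(aggregate(reports))
```

Weighting by games played means that one player’s couple of odd games barely move the consensus: in the example, the instance that “learned” that an early nuke works almost always is outweighed by the instance with fifty games of evidence to the contrary.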

The hub would serve several purposes:

- Upon initial boot, and periodically after that, the AI would be updated with the latest logic, solutions and strategies to better “win”, based on the experience of everyone else who has played so far.

- Your EHE learns individually based on your reality, but it always checks in to validate that its memory matrix isn’t too far off course. When a player’s particular behavior (like that of the nuking players mentioned above) confuses their EHE, it can check back with the mother ship and disregard knowledge that has been shown to be “wrong”.

- When the particular experience of an EHE makes it efficient at solving a specific problem (because a player confronted it in a specific fashion, for instance), the new information can be uploaded to the hub and shared back with the community.
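The three roles in the list above can be sketched as a single client-side “check-in” routine. This is a minimal illustration under our own assumptions, not the EHE’s actual protocol: local memory is a map of strategy to success rate, the hub’s consensus arrives as a plain dictionary (in practice it would come over the network), and `check_in`, `PULL_BACK_DISTANCE`, `DISCARD_DISTANCE`, `PULL_BACK_WEIGHT` and `MIN_GAMES_TO_SHARE` are hypothetical names and tuning values. Like society’s “mold”, small deviations are kept, larger ones are nudged back toward the center, and extreme ones are dropped in favor of the shared knowledge.

```python
# Hypothetical tuning values, in absolute success-rate units (0..1).
PULL_BACK_DISTANCE = 0.2   # beyond this, nudge local knowledge toward consensus
DISCARD_DISTANCE = 0.5     # beyond this, treat the local lesson as "wrong"
PULL_BACK_WEIGHT = 0.5     # share of the local value kept when nudging
MIN_GAMES_TO_SHARE = 20    # experience required before uploading a lesson


def check_in(local: dict[str, float], consensus: dict[str, float],
             local_games: dict[str, int]) -> tuple[dict[str, float], dict[str, float]]:
    """Validate local memory against the hub consensus.

    Returns (updated_local_memory, lessons_to_upload).
    """
    # 1. Pull: start from the latest shared knowledge.
    updated = dict(consensus)
    uploads: dict[str, float] = {}

    for strategy, local_rate in local.items():
        well_supported = local_games.get(strategy, 0) >= MIN_GAMES_TO_SHARE
        shared_rate = consensus.get(strategy)

        if shared_rate is None:
            # 3. Something the hub has never seen: keep it, and share it
            #    once enough games back it up.
            updated[strategy] = local_rate
            if well_supported:
                uploads[strategy] = local_rate
            continue

        distance = abs(local_rate - shared_rate)
        if distance >= DISCARD_DISTANCE:
            # 2. Far off course: disregard the local lesson entirely.
            updated[strategy] = shared_rate
        elif distance >= PULL_BACK_DISTANCE:
            # 2. Drifting: pull local knowledge back toward the center.
            updated[strategy] = (PULL_BACK_WEIGHT * local_rate
                                 + (1 - PULL_BACK_WEIGHT) * shared_rate)
        else:
            # Normal variation: keep the player-specific adaptation.
            updated[strategy] = local_rate
            # 3. Share it if it beats the consensus and is well supported.
            if local_rate > shared_rate and well_supported:
                uploads[strategy] = local_rate

    return updated, uploads
```

One limitation of this sketch is worth stating: a genuinely better strategy that differs sharply from the consensus would also be pulled back or discarded. A real hub would need more evidence than a single distance (for example, how many games stand behind each value) to tell a breakthrough apart from a confused player.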

So, while the game learns by playing against you, and adapts to your style of playing, it also shares the knowledge of thousands of other players around the world. This knowledge means that an EHE-powered game connected to the hub would have tremendous replay value, as the game’s AI is continually evolving and adapting to the game’s circumstances and experience of play.

The hub, viewed as a social tool, can also be very interesting. How would the community react to a specific event or trigger? Are all players worldwide playing the same way? Are all age groups? Studying how the memory hub evolves and reacts would be a treasure trove of information for future games, but also for the designers working on a current game. If an exploit arises or a way to “cheat” is found, the memory hub will learn about it very quickly and can warn the designers about what players are doing and how they’re beating the game or, conversely, how the game keeps beating them under certain conditions. That would let designers adjust almost in real time, based on real play data.
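The exploit-warning idea can be illustrated with a simple statistical check the hub might run over aggregated match results. In this sketch, which is our own assumption rather than a description of the hub, each record notes which strategy the player relied on and whether the player won. Any strategy whose player win rate sits far above the overall rate (a possible exploit) or far below it (the game always beats players), with enough games to be meaningful, is flagged for the designers. `MatchResult`, `flag_anomalies` and the default thresholds are hypothetical, and the test used is a standard normal-approximation z-score for a proportion.

```python
import math
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class MatchResult:
    """Hypothetical record the hub receives after each game."""
    strategy: str      # dominant strategy the player used
    player_won: bool


def flag_anomalies(results: list[MatchResult], z_threshold: float = 3.0,
                   min_games: int = 30) -> dict[str, str]:
    """Flag strategies whose player win rate deviates strongly from the overall rate."""
    if not results:
        return {}
    overall = sum(r.player_won for r in results) / len(results)
    if overall in (0.0, 1.0):
        return {}  # No variation at all, so the z-score is undefined.

    wins: dict[str, int] = defaultdict(int)
    games: dict[str, int] = defaultdict(int)
    for r in results:
        games[r.strategy] += 1
        wins[r.strategy] += r.player_won  # bool counts as 0 or 1

    flags: dict[str, str] = {}
    for strategy, n in games.items():
        if n < min_games:
            continue  # Too little data to say anything.
        rate = wins[strategy] / n
        # Standard error of a proportion, assuming the overall win rate.
        std_err = math.sqrt(overall * (1 - overall) / n)
        z = (rate - overall) / std_err
        if z > z_threshold:
            flags[strategy] = "possible exploit"
        elif z < -z_threshold:
            flags[strategy] = "players always lose"
    return flags


if __name__ == "__main__":
    results = (
        [MatchResult("naval_blockade", i % 2 == 0) for i in range(200)]
        + [MatchResult("capital_rush", True) for _ in range(40)]
        + [MatchResult("air_superiority", i % 10 == 0) for i in range(50)]
    )
    print(flag_anomalies(results))
```

A production system would have to deal with issues this sketch ignores, such as checking many strategies at once (which inflates false alarms) and player skill varying across the population; the point is only that aggregated play data makes such outliers visible quickly.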

In the end, we propose the Borg not only as a safeguard against bad memory, but as a staging ground for better learning and better dissemination of what is learned, for the shared benefit of all players.

As always, we welcome your questions, comments, and thoughts on this or any of our articles.